One of the first things we used to do to resolve a substantial part of the load issues caused by a Joomla website was to switch the main content tables from the MySQL InnoDB storage engine to MyISAM. The results were always impressive. Nevertheless, we always had conflicting feelings after the switch: while we were happy that the load was down, we knew that we were playing with fire, and we knew that there was a way to make InnoDB work on a very large Joomla database. We simply chose to handle the issue reactively rather than proactively.

The reason why we knew that we were playing with fire is the fact that MyISAM locks the whole table when inserting, deleting, or updating rows, which means that any subsequent CRUD (Create, Read/Retrieve, Update, Delete) activity will have to wait for the lock to be released to get executed. Of course, the locking time is typically very small, but things can get very ugly very quickly when a massive update to a critical table takes place, and so all the queries needing that table will have to wait for a long time, creating an unmanageable backlog of queries, ultimately causing the server load (caused by MySQL) to skyrocket.

The nice thing about InnoDB is that, unlike MyISAM, it only locks the row(s) it is updating or deleting, which cuts lock waits on a busy table to almost nil. But, for the life of us, we were unable to get InnoDB to play well on large Joomla websites powered by large databases.

A few weeks ago, however, everything changed. We started seeing some minor performance issues on a large Joomla website that we manage. We ssh’d to the server and we noticed that the load was hovering around 3, which is not bad, but not good either. A load of around 3, when the main load is caused by MySQL (and not by other processes), typically hints that there are some slowdowns on the website. So, we optimized the website even more (we had already performed many core optimizations on that particular website), but the outcome wasn’t great. We were frustrated, and we knew that we had reached the physical limit of MyISAM, but we were just afraid of jumping into the abyss.

Eventually, we decided to take that leap of faith, because, as mentioned at the beginning of this post, we knew that InnoDB would perform much, much better than MyISAM provided the right database configuration settings were chosen. We were so determined to make this whole thing a success that we even switched to a better server that had double the memory and a faster SSD. Here are the server details (note that the website in question receives 50,000 uniques/day, and, believe it or not, the System – Cache plugin is disabled on that website):

Processor: Intel Xeon E5-2650 v3 10 Cores

Memory: 64GB DDR4 SDRAM

Primary Hard Drive: 6 x 500GB Crucial SSD Hardware RAID 10

Secondary/Backup Hard Drive: 1TB SSD Backup Drive

Linux OS: CentOS 6 - 64Bit

Control Panel: cPanel / WHM - Fully Managed

Since we were switching to InnoDB, we cared mostly about 3 things: more RAM, a fast SSD drive, and a fast processor (in that order). The RAM was doubled (the client had 32 GB before), the SSD drive was slightly faster, and the processor was much faster (despite the fact that the client had a 48 core AMD processor before).

After moving to the new server, we noticed that not much had changed: the load dropped slightly and hovered around 2.8 (which was still not good), and the (unfixable) slow queries were still being recorded to the slow query log. It was definitely a good time to test the power of InnoDB to see whether it would solve the problem or not.

How we switched from MyISAM to InnoDB

Switching from MyISAM to InnoDB consisted of 3 steps:

- Modifying the MySQL configuration file to ensure InnoDB runs optimally.

- Restarting the MySQL server.

- Switching all the database tables from MyISAM to InnoDB.
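The third step lends itself to scripting rather than converting tables one by one. What follows is only a minimal sketch of one way to do it, not a record of the exact commands run on that server: a small shell function (the name gen_alter_statements and the sample table names are made up for illustration) reads table names from standard input and prints one ALTER TABLE statement per table.

```shell
# Hypothetical helper: turn a list of table names (one per line on stdin)
# into ALTER TABLE statements that switch each table to InnoDB.
gen_alter_statements() {
    while IFS= read -r table; do
        # Skip blank lines.
        [ -n "$table" ] || continue
        printf 'ALTER TABLE `%s` ENGINE=InnoDB;\n' "$table"
    done
}

# Example with two made-up Joomla table names:
printf '%s\n' jos_content jos_categories | gen_alter_statements
```

On a real server, the list of table names could come from a query against information_schema.tables (filtering on table_schema and on engine = 'MyISAM'), and the generated statements should be reviewed, and the database backed up, before running them.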

Of course, the most difficult part was finding the right InnoDB configuration settings for that particular website, and after a lot of very hard work, we found them! Here they are:

innodb_buffer_pool_size = 8G

innodb_log_file_size = 2G

innodb_log_buffer_size = 256M

innodb_flush_log_at_trx_commit = 0

innodb_flush_method = O_DIRECT

After determining the above values, we added them to the my.cnf file in the /etc folder.
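For reference, InnoDB settings like these go under the [mysqld] section of the my.cnf file. The sketch below writes the five values from this post into a scratch file (deliberately not the live /etc/my.cnf) and then lists the innodb_ lines back, which can serve as a quick sanity check before a restart. The function name list_innodb_settings and the scratch file are assumptions made for illustration.

```shell
# Hypothetical helper: print the innodb_* settings found in a my.cnf-style file.
list_innodb_settings() {
    # Match lines that start (after optional spaces) with "innodb_".
    grep -E '^[[:space:]]*innodb_' "$1"
}

# Write the values from this post to a scratch file, not to the live /etc/my.cnf.
scratch_cnf="$(mktemp)"
cat > "$scratch_cnf" <<'EOF'
[mysqld]
innodb_buffer_pool_size = 8G
innodb_log_file_size = 2G
innodb_log_buffer_size = 256M
innodb_flush_log_at_trx_commit = 0
innodb_flush_method = O_DIRECT
EOF

list_innodb_settings "$scratch_cnf"
rm -f "$scratch_cnf"
```

Pointing the same function at /etc/my.cnf shows which InnoDB settings are actually in place on the server.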

Of course, the million dollar question (if you’re reading this post in the year 2100 then please change million to billion for accuracy) is: how do you determine the above values? While we know that you are not going to give us a million dollars for our answer (or is it the one who asks the question who gets the million dollars? We’re not really sure!), we are going to explain how we got the above values for you anyway:

innodb_buffer_pool_size is probably the most important value here. InnoDB caches table data and indexes in the RAM: when a query hits an InnoDB table, the database engine checks whether the data it needs is already in the RAM. If it is, then it reads it from the RAM (and not from the disk); if it isn’t, then it loads it from the disk into the RAM first. innodb_buffer_pool_size, as you may have guessed, is the maximum amount of RAM that InnoDB can use for that cache. If the cache fills up, then InnoDB has to evict older data to make room and go back to the disk more often, which is a much slower process.

Most references state that the amount of RAM allocated to innodb_buffer_pool_size should be 80% of the total RAM, but we think this is just too much. Additionally, these references assume that the database server runs on its own physical server, which is not the case for the absolute majority of our clients (they have the database server, the web server, and the mail server all running on the same physical server). Since innodb_buffer_pool_size will contain, at most, all the data in all the databases, an intelligent way to determine its value is to calculate the size of the /var/lib/mysql folder by issuing the following command in the shell:

du /var/lib/mysql -ch | grep total

In our case, the above returned 2.4 G, so we just multiplied it by 3 and we rounded it, and we came up with 8 G (8 Gigabytes). Of course, even 3 G could have worked for us, but we just didn’t want to take any chances and we had a lot of RAM. Don’t be frugal with this value as it’s the most critical in an InnoDB environment.
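The arithmetic above is easy to script. This is a minimal sketch, assuming that "rounded" means rounding up to the next whole gigabyte (7.2 becomes 8); the function name suggest_buffer_pool_gb is hypothetical, and the input is the data size in gigabytes as reported by the du command.

```shell
# Hypothetical helper: data size in GB -> suggested buffer pool size in whole GB.
# Assumption: multiply by 3, then round up to the next whole gigabyte.
suggest_buffer_pool_gb() {
    awk -v size="$1" 'BEGIN {
        want = size * 3
        whole = int(want)
        if (whole < want) whole++   # round up any fractional part
        print whole
    }'
}

suggest_buffer_pool_gb 2.4   # the 2.4 G measured on our server
```

With an input of 2.4 this prints 8, which matches the value we settled on. The multiplier of 3 is our own safety margin, not a MySQL rule, so treat the result as a starting point.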

innodb_log_file_size is the size of each of the physical log files (the redo logs) where recent database changes are recorded, both for performance reasons and to restore the database after a crash. innodb_log_file_size is a bit more complex than innodb_buffer_pool_size to determine accurately (make that highly complex). But a good rule of thumb that works well for most database instances is to set this value to 25% of the innodb_buffer_pool_size, which in our case is 2 G.
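As a quick illustration of that 25% rule of thumb, here is a small sketch that turns a buffer pool size in whole gigabytes into a log file size in megabytes. The function name suggest_log_file_mb is hypothetical, and the rule itself is only a rough starting point.

```shell
# Hypothetical helper: buffer pool size in whole GB -> log file size in MB,
# using the 25% rule of thumb.
suggest_log_file_mb() {
    # 1 GB = 1024 MB, so a quarter of N GB is N * 1024 / 4 MB.
    echo $(( $1 * 1024 / 4 ))
}

suggest_log_file_mb 8   # the 8 G buffer pool from this post
```

For an 8 G buffer pool this prints 2048, that is, the 2 G used in our configuration.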

Of course, you might be asking yourself, why not just set the innodb_log_file_size to a very high number and then forget about it? Well, you shouldn’t do that for 2 reasons:

- Restarting MySQL will take a very long time. In fact, when we restarted MySQL with innodb_log_file_size changed to 2 G, we had to wait for about 3 minutes (when the value was set at about 256M, restarting the server took just a few seconds). This is because MySQL had to write 2 files of 2 G each to the filesystem: ib_logfile0 and ib_logfile1 (now you know what these weird ib_logfile files under the /var/lib/mysql folder are).

- Restoring a crashed database will also take a lot of time. Keep in mind that a crashed database is not a remote possibility, and we’ve seen it happen many times (almost all cases were caused by a power outage).

innodb_log_buffer_size is the amount of RAM that InnoDB can write the transactions to before committing them. Honestly, a value of 64 M is more than enough for almost any MySQL database server (most MySQL servers have it set to 8 M), but we changed it to 256 M when experimenting and we didn’t change it back, as a large innodb_log_buffer_size has no negative effect on the server beyond the RAM it reserves. In all fairness, a value of 1 M could probably have worked for us because we’re not even using explicit database transactions anywhere on the Joomla website (see below).

innodb_flush_log_at_trx_commit controls how often InnoDB writes its log to the disk. When set to 1 (the default), the log is written and flushed to the disk on every transaction commit, which is what makes the InnoDB database engine fully ACID (Atomicity, Consistency, Isolation, Durability) compliant, but which also creates a significant overhead on the MySQL database server (even when it’s not needed). When set to 0, the log is written and flushed about once per second instead, which is much faster, but which also means that a crash can lose up to about a second’s worth of changes. The absolute majority of Joomla websites out there don’t rely on database transactions (for clarification, a database transaction is completely different from a payment transaction), which is why we set this to zero (0) on a website that can live with that risk.

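innodb_flush_method is a setting that tells MySQL which method to use to flush (write to the disk) the database data and logs. O_DIRECT makes InnoDB bypass the operating system’s file cache for its data files, so that the data isn’t cached twice (once in the buffer pool and once by the OS). If you’re using hardware RAID, then set it to O_DIRECT. Otherwise, leave it empty.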

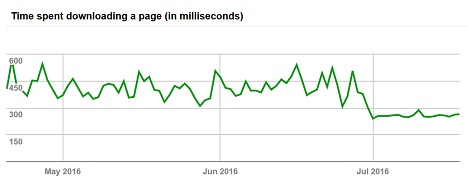

After applying the above settings in the my.cnf file, restarting the MySQL server, and then changing all the tables from MyISAM to InnoDB, we saw a huge improvement in the performance: the load dropped to slightly over 1, and the slow query log stopped recording slow queries every few minutes (in fact, we only had 3 slow queries since we did the switch 3 weeks ago). Even Google saw a substantial improvement, see for yourself:

As you can see, the time spent downloading a page has decreased significantly as of June 30th (which is when we switched to InnoDB). One can safely say that the download time was halved! Impressive, huh?

We hope that you found this post useful. If you need help with the implementation, then you can always contact us. Our rates are super affordable, our work is super clean, and we will make your Joomla website much, much faster!